Introduction

This

exercise is part 2 of the ArcPad Data Collection exercise. Part 1 of the

exercise can be seen here. The goal of the exercise for our group was to

capture points as part of a Micro-Climate survey. We were to collected data on Wind Speed, Dew

Point, Humidity, Windchill, Ground Cover, Temp at Surface, Temp at 2 meters, as

well as notes. We were also supposed to

gather data on Wind Direction but most groups, including ours, did not have a

compass. The data from one group that

did collect Wind Direction data can be seen in the results section as a smaller

map.

Once all the data was

collected we joined all the data

together to form a single feature class.

After this we were able to go to town mapping out the data.

Study Area

Our Study Area was on the

University of Wisconsin-Eau Claire campus in Eau Claire, Wisconsin. The campus

is located near the center of the city, and is bisected by the Chippewa

river. Figure 1

shows a map of the Eau Claire campus. Figure 2 shows the zones that we

collected data from. Our group,

consisting of Galen Kiely and Nick Bartelt was given the zone at the top of the

map, including the footbridge.

|

| Figure 1. This map shows the University of Wisconsin - Eau Claire. Most buildings are labeled. This is actually a map of the wireless coverage on campus, but it gets the job done very well. |

The weather on the day we

collected was very nice for early March.

The previous few days had seen temperatures in the upper 40’s rising

into the 50’s. The sun had melted most of

the snow cover, but there were still areas that were heavily shaded that had

not melted. There were also several

areas around campus that snow had been piled over the course of the winter, and

there was still ice on the banks of the Chippewa river.

As part of our preperation

for this exercise we created a Geodatabase with Domains and Subtypes in

Exercise 5. This link takes you to the tutorial from Exercise 5. As part of that we were required to estimate the

ranges of data that we would collect. We estimated the range of possible temperatures

at -30F to 60F. At the time most thought

we would be closer to the former than the latter. As it turned out our group and possibly

another group collected at least one sample data point above 60F.

Methods

Following on Exercise 5

and Exercise 6,

we began by deploying the geodatabase, this process is covered in the methods

section of exercise 6. When we confirmed

successful deployment we headed out to our assigned zone.

Each group was given a

Kestral 3000 Weather Meter. Simple to

operate, the Kestrel 3000 allows for the easy collection of weather data,

including the features shown in figure 3.

|

| Figure 3. Kestrel Weather Meter. These were used to collect data on Humidity, Temperature at Surface, Temperature at two meters, Dew Point, Wind Chill, and Wind Speed during the survey. |

|

| Figure 4. This shows the capabilities of the Kestrel unit, as well as some basic operations. We printed out a copy of these so we could remember what the symbols meant, very useful. |

We worked as a team with one

person using the Trimble Juno and the other on the Kestrel. One thing we noted was that it took longer to

collect all the data than we had thought it would take. The Kestrel instructions recommend about 15

seconds for an accurate reading. Since

we were taking two separate temp readings, one at the height of two meters and

another at the surface it took about 1.5 minutes to collect each point,

including notes. We also noted that the

longer we held the unit near the surface, the more the temperature would

change. This could have many

implications for the data, but it should still fit our purposes.

We ended up collecting around

55 points in the time we had. We began

on the south side of the bridge collecting points every few meters. The average

wind speed on the bridge was 5.8mph. We then walked along the sidewalk path

toward the Water St./ Summit Ave. Bridge, then turned and followed the bike

path back under the foot bridge. We

noted that there was a temperature difference of several degrees between the

bridge and the path underneath. Once we

came near the HHS building, we turned and went north around the Haas parking

lot. We walked and collected points all

around the Haas Fine Arts building. The

average wind speed nearer to the building was less than 3mph, this is partly

due to the wind screen provided by the trees along the river. After completing a route around the Haas

building and back to the parking lot and band practice field we headed back

across the bridge. As we headed back

across the bridge, we collected a few more points and noted that the

temperature over the two hour period had changed by 5 degrees at the surface

and 3 degrees at two meters.

Once back in the lab we

followed the import procedures from exercise 6 to check the data back in. After all the groups returned, all the points

were joined together using the Append tool.

The Append tool allows multiple data sets to be merged into a single

data set. Now that all the data is merged

we can begin interpolating the data and making maps.

Results

For more discussion of the

interpolation process, the methods used, and the benefits of the various types

of Interpolation, see Exercise 2. I used the Inverse-Distance Weighting

Interpolation method. This method uses

distance as a factor to calculate the influence of each point, with nearer

points having more influence than far away points.

The first map, figure 5 shows all the points on a background image of

the UWEC campus. It gives a good idea of

the scope of the survey that was done.

Next is a map of Dew Point, figure 6. Dew

Point is the temperature below which water vapor condenses to form Dew. The pattern seems to show clusters of higher

DP along Little Niagara creek that runs between upper and lower campus.

|

| Figure 6. This map displays a IDW Interpolation of the points. Low, damp areas seemed to have the highest Dew Point readings. |

Figure 7 is a map of Wind speed. The pattern indicates higher wind on Upper

campus around the dorms and parking lots, and also along the footbridge and

river. This seems consistent with what

would be expected.

|

| Figure 7. This map shows an IDW Interpolation of the Wind Speed data that was collected by the groups. As would be expected the higher, more open areas show more wind activity. |

Figure 8 shows the temperature at a height of two meters. Pattern shows lower temps in the wooded area

along Little Niagara Creek, and near the river.

Many areas of higher temperature can be seen in areas shielded by

buildings where wind could be lower, but the sun is still bright.

Figure 9 is a map of the difference between the temperature at

2 meters and the temperature at the surface.

The differences range from -9.9 to 2 degrees. This means that in some areas the surface

temp is nearly 10 degrees lower than the temp at 2 meters. This map can be

somewhat deceptive in that, if you removed the two highest and lowest points,

the variation would be less than 4 degrees.

There are also gaps in the data, since one group didn't collect

temperature at meter data.

Figure 10 displays the surface temperature minus the wind chill. Windchill is used as a measure of heat loss

lost by a body, but is sometimes referred to as the “feels like”

temperature. The map is fairly similar

to the temperature map, but there are patterns that can be seen.

|

| Figure 10. This map show the Wind Chill subtracted from the Surface Temperature. It was created using the same method as figure 9. |

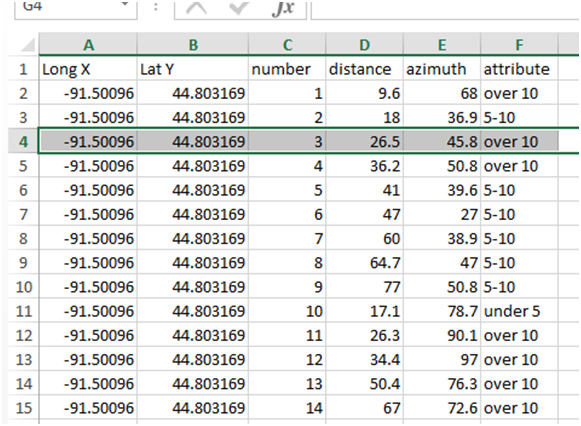

Figure 11 is a little different from the other maps. Since only one group collected data on wind

direction, this map shows only a small section of the study area. The MPH is indicated by the size of the

symbol, and direction is indicated by the orientation of the symbol. Since this is not a common feature on maps,

it took a little digging to find the options to make it happen.

|

| Figure 11. This micro-micro climate survey shows the Wind Speed and Wind Direction. Without compasses, most groups were unable to collect Wind Direction data. One group did, and this is the result. |

Once I selected out the

points that had directions data, opened layer properties. Under the Symbology tab, I selected

Proportional Symbols under the Quantities (see figure 11). This allows symbols to be relative to the a

selected field, in this case Wind Speed.

Next I clicked the rotation button.

This brings up the dialogue on the right side of figure 11. This allows the rotation of the symbol by a

data field, in this case wind_direction.

It also took a little work to get the symbol to work. This symbol is

actually used for Dams on maps. In order

to use it as an arrow I clicked on the Min Value button which brings the

dialogue in figure 12. Here I selected

the color and size. I also selected the

default angle, 180 degrees from the original position in this case.

So now each symbol shows the wind direction and is proportional to the

speed.

Conclusions

The objective was to spend time in the field using the Geodatabase Domains and Subtypes that we created in previous exercises. This gave us valuable time to gain understanding of what could be done better if there were to be a follow-up exercise, or if we were planning our ow survey at a future job. We also gained more time on some field equipment, the Trimble Juno, and the Kestrel 3000 Weather Meter. We succeeded in collecting data in the field, returning it to the lab for import and analysis. While we have done similar exercises in GIS 1 and Geog 200, I felt this was a much more in-depth process from start to finish; Geodatabase and domain creation to exporting a finished map.

.png)